WHAT'S IMPORTANT

📍 2021 AI Index released

The Stanford Institute for Human-Centered Artificial Intelligence (HAI) released the newest edition of their annual report on AI impact and progress. Explore the full report on the HAI Website. Looking back on 2020, they find that:

- AI hiring kept rising globally despite the pandemic.

- The USA, however, saw its first-ever drop in AI hiring, which may indicate that the industry is maturing.

- China overtook the USA in AI journal citations.

Stanford also updated their Global AI Vibrancy Tool with interactive visualizations that allow cross-country comparison for up to 26 countries in AI research, economy, and inclusion.

📍 OpenAI's CLIP has Multimodal Neurons

"Fifteen years ago [neuroscientists] discovered that the human brain possesses multimodal neurons. These neurons respond to clusters of abstract concepts centered around a common high-level theme, rather than any specific visual feature. The most famous of these was the "Halle Berry" neuron, a neuron featured in both Scientific American and The New York Times, that responds to photographs, sketches, and the text "Halle Berry" (but not other names). CLIP has these multimodal neurons too!"

In our January newsletter we highlighted the importance of OpenAI's CLIP. Their research team has now published a detailed dissection of the model and presents a "Spider-Man" neuron that responds to an image of a spider, an image of the text "spider," and the comic book character Spider-Man, both in costume and illustrated. Read more on the OpenAI Blog.

THINGS WE FOUND WORTH SHARING

🎨 1. ML Art – Google DeepMind's BigGAN can create 'realistic' images from random noise. OpenAI's CLIP, on the other hand, is well suited to scoring how closely an image matches a given text description. In January, Ryan Murdock, a graduate student from Utah, combined CLIP with BigGAN to create a text-to-image generator called The Big Sleep. The system iterates over images generated by BigGAN and tries to maximize the score CLIP assigns to each image for a given input description.

The author published a Colab Notebook with a code implementation so you can try it out yourself.
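
To make the optimization loop described above more concrete, here is a minimal toy sketch. It is not Ryan Murdock's implementation and uses neither BigGAN nor CLIP: `toy_generator` (a fixed linear map) and `toy_score` (cosine similarity) are hypothetical stand-ins, and the vectors are made up. It only illustrates the general idea of repeatedly nudging the generator's input so that the scorer rates the output as a better match for the description.

```python
import math

# Hypothetical stand-ins: a fixed linear map plays the role of BigGAN and
# cosine similarity plays the role of CLIP. Neither is the real model.
TOY_WEIGHTS = [
    [1.0, 0.5, 0.0],
    [0.0, 1.0, 0.5],
    [0.5, 0.0, 1.0],
]


def toy_generator(latent):
    """Map a latent vector to toy 'image' features (stand-in for BigGAN)."""
    return [sum(w * z for w, z in zip(row, latent)) for row in TOY_WEIGHTS]


def toy_score(image, text_embedding):
    """Cosine similarity of image and text vectors (stand-in for CLIP)."""
    dot = sum(a * b for a, b in zip(image, text_embedding))
    norm_image = math.sqrt(sum(a * a for a in image))
    norm_text = math.sqrt(sum(b * b for b in text_embedding))
    return dot / (norm_image * norm_text)


def optimize_latent(text_embedding, latent, steps=200, lr=0.1, eps=1e-4):
    """Gradient ascent on the latent so the generated image scores higher."""
    latent = list(latent)
    history = []
    for _ in range(steps):
        score = toy_score(toy_generator(latent), text_embedding)
        history.append(score)
        # Estimate the gradient by finite differences, one coordinate at a
        # time. This keeps the sketch dependency-free; it is an assumption
        # of this example, not how the real notebook computes gradients.
        grad = []
        for i in range(len(latent)):
            bumped = list(latent)
            bumped[i] += eps
            bumped_score = toy_score(toy_generator(bumped), text_embedding)
            grad.append((bumped_score - score) / eps)
        # Step the latent in the direction that increases the score.
        latent = [z + lr * g for z, g in zip(latent, grad)]
    history.append(toy_score(toy_generator(latent), text_embedding))
    return latent, history


if __name__ == "__main__":
    text = [0.2, 0.9, 0.4]       # made-up "text embedding"
    start = [1.0, -0.5, 0.25]    # made-up starting latent
    _, scores = optimize_latent(text, start)
    print(f"score before: {scores[0]:.3f}, after: {scores[-1]:.3f}")
```

In this toy setup the score climbs as the latent is updated. With real networks the same loop would need gradients from a deep learning framework rather than finite differences.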

Just a few days later, Big Sleep was turned into a poem-to-video generator. Will Stedden published Story2Hallucination, which creates multiple images based on a series of descriptions. By feeding the system poetry, he created mesmerizing visualizations for William Wordsworth's poems. Others have used Story2Hallucination to create music videos for their favourite acts, such as the recently disbanded Daft Punk. Try it yourself with this Colab Notebook.

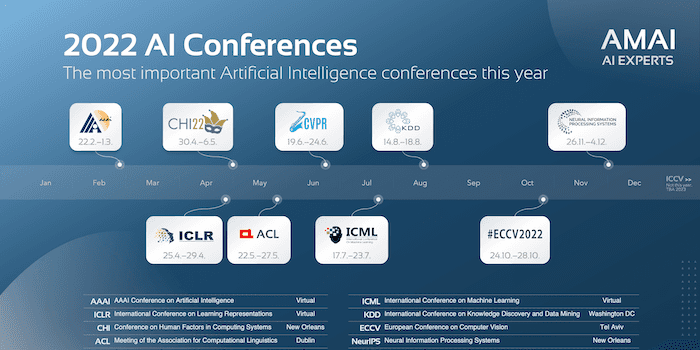

🗞 2. News – ICLR, one of the most important AI conferences, released the first list of invited speakers. Among them is Timnit Gebru, recently fired from Google for raising discrimination issues. See the list of speakers on the ICLR blog.

📄 3. Paper – A recent study by Google researchers compared Transformer modifications with the original architecture from 2017. In their paper "Do Transformer Modifications Transfer Across Implementations and Applications?" (arXiv), they find that "Surprisingly [...] most modifications do not meaningfully improve performance." Read a summary of their study on Synced.

💙 4. We are hiring! – We are looking for German-speaking Data Engineers. Check out the positions in our Enterprise AI team on the AMAI Careers page. See you soon!

💡 5. Use Case – MyHeritage, an online platform that lets you explore your genealogy, brought old family photos back to life. Thousands of users used DeepNostalgia to turn old black-and-white pictures of great-grandparents into live photos. An excellent marketing stunt that certainly earned MyHeritage much publicity. Under the Twitter hashtag #DeepNostalgia you can find impressive results and astonished user reactions. The technology was provided by the AI face platform of D-ID, who go into further detail on their blog.

🎙 6. Interview – DeepMind software engineer Julian Schrittwieser appears on the Stack Overflow Podcast to discuss their recent MuZero paper and what the lab is working on next. Listen in on the Stack Overflow Blog.

👁 7. Miscellaneous – The subreddit r/MachineLearning recently became a venue for discussing unreproducible research papers. A researcher complained that several of their attempts to reproduce results from prominent machine learning papers had failed. Others on the platform went on to share similar stories and posted more such papers.

Days later, the original poster, ContributionSecure14, released Papers Without Code, a centralized list of papers that other researchers have failed to reproduce. While some authors withhold their code implementations for valid reasons, such as protecting user data or intellectual property, Papers Without Code wants to save researchers time and disincentivize unreproducible papers. Read more on The Next Web.

📄 8. Paper – Currently, image recognition systems require large amounts of labelled pictures. Facebook AI's chief scientist Yann LeCun hopes that, "long term, progress in AI will come from programs that just watch videos all day and learn like a baby." With a new approach called SEER, short for SElf-supERvised, Facebook wants to start a "New Era for Computer Vision".

Read more on WIRED and the Facebook AI Blog. The paper "Self-supervised Pretraining of Visual Features in the Wild" is available on arXiv.

UPCOMING EVENTS

🇩🇪 March 25 (online, 10:00 CET) – Case study of AI in a mid-sized company:

KI Lab Nordschwarzwald - In-house enterprise search engine -

The volume of company-internal data grows every day. Employees spend many unnecessary minutes searching the company's own filing systems for case records, operating manuals, operating instructions, process documentation, customer specifics, or similar information.

The inefficiency of searching for relevant information increases steadily with the volume of data. Woldemar Metzler of AMAI GmbH shows how to remedy this, using concrete application examples of in-house enterprise search engines.

Such a project often offers a perfect entry point into the world of AI, especially for SMEs, since the implementation effort is manageable and the results are convincing. – Register on podio.com.

📅 April 12 (online, 16:30 CET) – NVIDIA CEO & Godfathers of AI

To kick off the GTC Conference (April 12-16), NVIDIA founder Jensen Huang invites Yoshua Bengio, Geoffrey Hinton and Yann LeCun for a joint keynote. Registration is free and is not required to view the keynote. – The livestream will be available on nvidia.com.