WHAT'S IMPORTANT

📍 OpenAI shared two consequential papers combining language and computer vision, DALL·E and CLIP:

DALL·E

DALL·E is a 12-billion-parameter version of GPT-3 that generates images from text descriptions; its name combines Pixar’s WALL·E and Salvador Dalí. The generative model can manipulate and rearrange objects in a generated image and even create things that don’t exist, for example an armchair in the shape of an avocado.

- 🤖 Make sure to try the online demo on the OpenAI blog (openai.com)

- ⚡️ Watch a short video summary by Two Minute Papers (8 min on youtube.com)

- 🐌 If you have the time, follow Yannic Kilcher's extensive walkthrough of the accompanying paper (55 min on youtube.com)

CLIP

The second paper is CLIP, short for Contrastive Language–Image Pre-training, which introduces a model capable of zero-shot image classification. Previous supervised classification models perform very well on the classes they were trained on, but they don't generalize well: shown an object from a class that wasn't in the training dataset, they generally do no better than random guessing. CLIP, on the other hand, can recognize a remarkably wide range of categories it was never explicitly trained to classify.

- 🤖 Read the official blog post by OpenAI (openai.com)

- 👩‍💻 Try CLIP yourself by following this guide from Roboflow (roboflow.com)

- 🐌 Follow Yannic Kilcher, the one-man paper discussion group, as he goes through the paper in depth (50 min on youtube.com)
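In rough terms, CLIP classifies zero-shot by embedding an image and a set of candidate captions (for example "a photo of a dog") into a shared space and choosing the caption whose embedding is most similar to the image. The Python sketch below illustrates only that final scoring step, under stated assumptions: the embeddings are made-up toy vectors standing in for the outputs of CLIP's image and text encoders, `zero_shot_classify` is a hypothetical helper rather than part of any CLIP library, and the `logit_scale` of 100.0 is an assumed temperature value.

```python
import math


def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def zero_shot_classify(image_emb, label_embs, logit_scale=100.0):
    """Return (best_label, probabilities) for one image (hypothetical helper).

    image_emb:   embedding of the image (stand-in for an image encoder output)
    label_embs:  dict mapping label -> embedding of a caption such as
                 "a photo of a {label}" (stand-in for a text encoder output)
    logit_scale: assumed temperature that sharpens the softmax
    """
    img = normalize(image_emb)
    labels = list(label_embs)
    # Cosine similarity between the image and each caption, scaled by temperature.
    logits = [
        logit_scale * sum(a * b for a, b in zip(img, normalize(label_embs[lbl])))
        for lbl in labels
    ]
    probs = dict(zip(labels, softmax(logits)))
    best = max(probs, key=probs.get)
    return best, probs


# Toy 3-dimensional embeddings, invented purely for illustration.
label_embs = {
    "dog": [0.9, 0.1, 0.0],
    "cat": [0.1, 0.9, 0.0],
    "avocado armchair": [0.0, 0.2, 0.95],
}
image_emb = [0.05, 0.25, 0.9]

best, probs = zero_shot_classify(image_emb, label_embs)
print(best)  # prints: avocado armchair
```

Adding a new category only requires embedding one more caption, with no retraining, which is what lets this kind of model handle classes a fixed supervised classifier was never trained on.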

THINGS WE FOUND WORTH SHARING

🎨 1. Showcase – How Bad Is Your Music Taste? A "sophisticated AI" judges your awful taste in music based on your Spotify listening history (pudding.cool).

👩‍💻 2. Code & Tutorials – Facebook open-sourced AI models that predict whether a COVID-19 patient’s condition will deteriorate, hoping to help hospitals plan their resource allocation (ai.facebook.com).

🎙 3. Interview – Geoffrey Hinton, one of the "Godfathers of AI", reflects on his 2020 research on the Eye on A.I. podcast, hosted by longtime New York Times correspondent Craig S. Smith (50 min on youtube.com).

🎨 4. Showcase – ML engineers from the Google Cloud team showcase their no-code cloud tools by training an AI pastry chef. They feed the model cake and cookie recipes and teach it how to write recipes on its own. Afterwards they let it come up with a cookie-cake hybrid called the “cakie”. See the baking results in the accompanying video (cloud.google.com).

📚 5. Papers – SuperGLUE is currently among the most challenging benchmarks for evaluating Natural Language Understanding (NLU) models. Only 16 months after the benchmark was introduced, researchers at Microsoft have topped it: a 1.5-billion-parameter version of their DeBERTa model (paper on arxiv.org) surpasses the human baseline. Read more on the Microsoft Blog (microsoft.com).

🎓 6. Education – The PyTorch Transformer documentation does not explain how to run inference. To fill that gap, Sergey Karayev of Turnitin wrote an easy-to-follow Transformer tutorial in Colab that covers both the encoder and the decoder, using PyTorch Lightning (colab.research.google.com).

📚 7. Papers – A new state-of-the-art (SOTA) result was reached on the ImageNet image classification task, breaking 90% top-1 accuracy for the first time. Researchers from Google Brain trained an EfficientNet-L2 using Meta Pseudo Labels, a semi-supervised learning method (arxiv.org).

🔔 8. In Case You Missed It – The AI consulting team at AMAI launched a new website. Alongside an updated careers page, it features a newly started developer blog written by AMAI's AI experts.

UPCOMING EVENTS

💙 January 21, 17:00 CET (online, German) – KI im Unternehmen: Wie und wo fange ich an? (AI in the company: how and where do I start?) Our colleague Woldemar Metzler sheds light on the particular challenges that German Mittelstand companies (small and medium-sized enterprises, or SMEs) face when approaching AI. He outlines first steps SMEs can take to stay competitive in the future with artificial intelligence applications. – Free admission; register on digitalhub-nordschwarzwald.de.

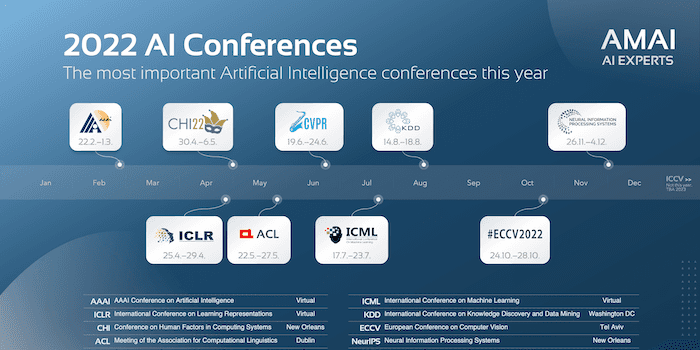

📅 February 2-9 (online) – The first important AI conference of the year is coming up. The 35th AAAI Conference on Artificial Intelligence, held annually by the Association for the Advancement of Artificial Intelligence, will take place virtually. – Participate on aaai.org.